Should labelling AI-generated content on social media be an ethical consideration or a disclosure obligation for Australian companies?

Social media platforms such as Facebook and Instagram updated their AI-generated content policy earlier this year. Their parent company, Meta, has started applying labels either when users disclose the use of GenAI tools or when it detects industry-standard AI image indicators. [1] However, the full set of industry-standard indicators is not disclosed, so businesses that use generative AI tools to assist their content creation may fly under the radar.

GenAI content policies set by social media platforms, such as Meta’s, may be an effort to align with Australia’s AI Ethics Principles, which help ensure businesses and governments practise the highest ethical standards when designing, developing and implementing AI. Currently, generative AI is regulated through legislation such as the Australian Consumer Law, the Privacy Act, and copyright law. [2]

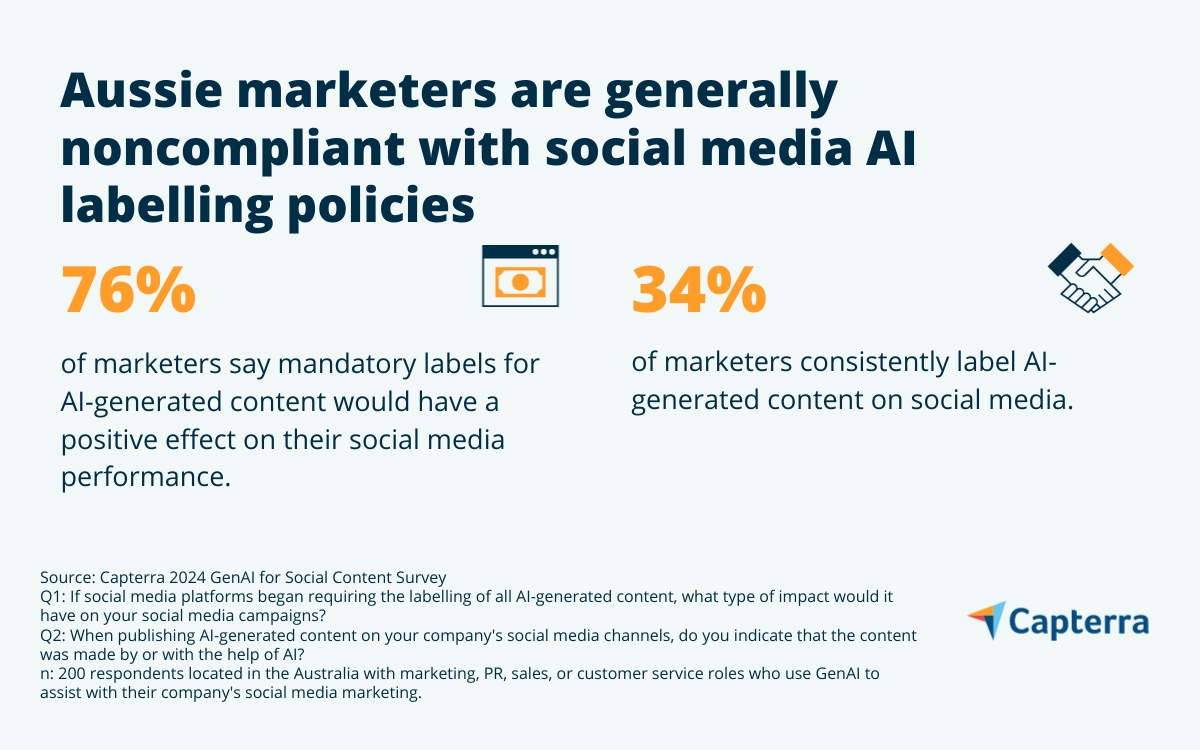

For now, few companies fully comply with social media labelling policies. According to Capterra’s survey of over 1,600 global marketers, the average Australian company uses GenAI to produce nearly half (49%) of its social media content; however, only about a third (34%) always label the AI-generated content they post to social platforms.*

While it may or may not be in the interest of businesses to label their content, brand management software can help ensure GenAI content complies with all relevant laws and guidelines.

- Only 34% of Aussie marketing professionals using GenAI for social media content creation say their companies always label AI-generated content on social media.

- 76% of respondents say mandatory labels for AI-generated content on social media would positively affect their companies’ social media performance.

- 72% of respondents are moderately to highly concerned about the risk that their companies’ AI-generated marketing content could spread harmful misinformation.

- 78% of respondents say using AI-generated content has enhanced their companies’ performance on social media.

Only around a third of Aussie marketers always label their AI-generated content on social media

In response to the rapid rise in artificial intelligence (AI) usage, the Australian government has introduced a plan to impose rules on high-risk technologies while minimising interventions in low-risk AI to allow its growth to continue. The industry minister also noted plans for labelling AI-generated content so it can’t be mistaken for genuine content. [3]

However, labelling AI-generated content could have far-reaching consequences for businesses, according to research by the Massachusetts Institute of Technology (MIT), which suggests that consumers are less trusting of content bearing such labels. [4]

Businesses are leveraging GenAI in their marketing content for its productivity gains and cost savings (and to be clear, businesses that use GenAI are seeing some benefits). However, consumer perception of AI-generated content, which has received a lot of bad press due to political misuse, could have downstream effects on marketing at large.

In general, labelling efforts have been chaotic so far. On a theoretical level, experts agree that GenAI can create false advertising—see the viral Glasgow Willy Wonka event of early 2024 [5]—but they diverge on exactly how or whether labelling would protect the public. Some researchers caution that while AI labels can do good under certain conditions, questions exist as to what labels should entail, what level of AI involvement warrants a label, and how to deal with noncompliance and other inevitable drawbacks of labelling policies. [4] Currently, there is no universal AI-generated content label and no foolproof method of detecting AI-generated content.

On a practical level, the rollout of social media AI labelling policies has been scattershot and error-prone. While some platforms use emerging, but faulty, detection techniques to automatically label content that appears to be AI-generated, others rely on creators to self-report their use of GenAI.

Even in the imaginary scenario where all businesses play fair with AI labels, a self-reporting policy would still fail. Without effective detection tools, businesses that hire creative agencies or freelancers to produce their marketing content can’t be sure whether those third parties are using GenAI. In fact, an overwhelming 85% of Australian respondents whose companies outsource content creation are moderately to highly concerned that they are unwittingly receiving output created by AI.

All of this uncertainty leaves businesses in a tricky position and with little incentive to use AI labels. And though Australian respondents say AI labels are a good thing, their lack of full disclosure may indicate otherwise.

Aussie marketers say AI labels would improve social media performance but are cautious about using them

Just over three-quarters (76%) of marketers say AI labels would improve their social media performance, yet only about a third (34%) always indicate that their content was made by or with the help of AI.

Australians share a negative sentiment toward, and a distrust of, GenAI usage in news content. According to the federal government’s latest Television and Media survey of about 5,000 Australians, nearly four in five respondents who know about GenAI said their trust in a news story would be negatively impacted if they found out it had been fully produced by GenAI. In the same study, more than half said their trust would be negatively impacted even if the content had only been GenAI-assisted. [6]

Thus, Australians may generally perceive GenAI content as lacking credibility. As a result, businesses may fear their content will be perceived negatively by the public if they fully disclose their use of GenAI tools to assist with or create social media content.

Below are some further reasons Australian businesses may be expressing caution about disclosing GenAI content:

- AI slop is the new spam. AI slop refers to vast amounts of GenAI content being uploaded onto the internet, and just like spam, audiences don’t want to see slop. Businesses don’t want to be seen as contributing to the influx of mediocre GenAI content on the internet. If consumers are statistically less likely to engage with AI-generated content, then why would companies label it as such? [7]

- GenAI could lead to social media abstention. Analysts predict that by 2025, a perceived decline in social media content quality related to GenAI will prompt half of consumers to significantly decrease their use of social media platforms. Such a change would tank the return on investment (ROI) of GenAI tools, not to mention a whole host of social media marketing software investments. [8] Businesses are hoping that their AI-generated content will be interesting enough that audiences won’t know or care that it wasn’t made by humans.

- Lack of consistent labelling from competitors. About one in five surveyed Australian marketers says using GenAI has yielded a competitive advantage. A level playing field therefore requires AI labels to be applied consistently. Without that consistency, businesses may try to beat detection rather than label their GenAI content, to avoid the negative connotations associated with it.

- Labelling adds a few steps to a not-quite-fully automated workflow. Doing the important work of aligning stakeholders to develop an internal labelling framework and including a labelling step in the GenAI content publishing workflow takes time that could put businesses behind their competitors.

In light of the possible reasons outlined above and the challenges Australian businesses face in labelling AI-generated content, it’s important to ask the following questions, drawn from Australia’s AI Ethics Principles, [2] to make the right decision:

- How does the labelling of AI-generated content contribute to the overall well-being of your users and broader community?

- Are there potential risks of AI-generated content that need to be communicated through labelling?

- How do you ensure AI-generated content is reliable and safe for your users?

- Are you being transparent about the use of AI in generating content?

- What accountability measures are in place to address any issues that arise from AI-generated content?

Transparency around GenAI use is imperative

All that said, being honest with customers and publishing only high-quality content is always an effective long-term strategy. If your business chooses to use GenAI for marketing, you should do so responsibly and transparently.

Here are some tips on how to approach GenAI content and labelling on social media.

- Do comply with social platforms’ stated policies on AI-generated content labelling.

- Do explore ways that AI can automate the routine tasks your human creatives do with marketing content. For instance, AI-powered grammar checkers or image editing tools can save marketers time that is better spent on complex creative tasks.

- Don’t use GenAI to replace human creatives. GenAI can produce content quickly and at scale, but that content needs human intervention before it’s published.

- Don’t publish low-quality AI-generated content—in other words, content that’s boring, uncanny or full of mistakes. Your social media audience will immediately clock that it’s AI-generated and will perceive you as inauthentic.

Meta (Instagram, Facebook, and Threads): Meta previously applied a “Made with AI” label to posts with metadata that indicated the presence of AI-generated content. Following an outcry from photographers whose content was labelled due to their use of digital editing tools, Meta updated its label to simply read “AI info.” [9] Users can click on the label to learn more about why it was applied.

TikTok: TikTok launched a required AI-generated content label last year, warning creators that their content could be removed if they did not disclose their use of AI. It soon began testing technology that can automatically label AI-generated content. It now labels AI-generated content with “Content Credentials,” a digital watermarking technology that attaches metadata to AI-generated content. [10]

YouTube: As of March 2024, YouTube creators are required to label realistic-looking content that was made using AI. However, the label is not required for certain effects, such as beauty filters or background blurring, or for “clearly unrealistic” content, such as animation. [11]

For Australian businesses, brand management software is essential when labelling AI-generated content to ensure consistency, compliance, and alignment with brand identity. As GenAI becomes increasingly integrated into marketing content creation, maintaining a clear and consistent brand voice across all digital platforms is crucial. It is also vital that GenAI content adheres to specific brand guidelines and regulatory requirements, which is particularly important in a market where consumer trust and brand reputation are key advantages.

Survey methodology

*Capterra’s GenAI for Social Content Survey was conducted in May 2024 among 1,680 respondents in the U.S. (n: 190), Canada (n: 108), Brazil (n: 179), Mexico (n: 199), the U.K. (n: 197), France (n: 135), Italy (n: 102), Germany (n: 90), Spain (n: 123), Australia (n: 200), and Japan (n: 157). The goal of the study was to learn more about the impacts of generative AI on social media marketing strategies. Respondents were screened for marketing, PR, sales, or customer service roles at companies of all sizes. Each respondent indicated their use of generative AI to assist with their company's social media marketing at least once each month.

Sources

1. Meta Will Require Labels on More AI-generated Content, The Verge

2. Australia’s Voluntary Ethics Principles, Australian Government, Department of Industry, Science and Resources

3. Risky AI Tools To Operate under Mandatory Safeguards, as Government Lays Out Response to Rapid Rise of AI, ABC News

4. Labeling AI-Generated Content: Promises, Perils, and Future Directions, MIT

5. The Willy Wonka Experience’s Generative AI Debacle is Just the Start of Our Nightmarish New Advertising Reality, Fast Company

6. Australians Distrust Use of AI in News Content, The Canberra Times

7. Spam, Junk … Slop? The Latest Wave of AI Behind the ‘Zombie Internet’, The Guardian

8. How Marketing Can Capitalize on AI Disruption, Gartner

9. Instagram’s ‘Made with AI’ Label Swapped Out for ‘AI info’ After Photographers’ Complaints, The Verge

10. TikTok Begins Automatically Labeling AI-generated Content, CNBC

11. YouTube Adds New AI-generated Content Labeling Tool, The Verge